With the advancement of machine learning, deep learning, and neural networks, applying AI to edge computing has become a natural next step. At the same time, many people now see artificial intelligence as a practical everyday tool rather than something to be feared.

The current trend toward greater Internet of Things (IoT) and cloud adoption is creating a growing need for edge computing. Processing data at its source, the edge of the network, is often more efficient than sending all of it to a large central server. This saves bandwidth and gives faster access to results.

What exactly is artificial intelligence in edge computing? What are its benefits and use cases? And how does it differ from AI in the cloud?

Let’s figure it all out!

What is Edge AI?

Edge AI is the practice of running artificial intelligence (AI) models, typically machine learning models, directly on devices at the edge of a network instead of in a centralized data center.

The term “edge” refers to devices near the end user or the data source, such as smartphones, tablets, laptops, or sensors. AI models running in the cloud, by contrast, typically have access to more computing resources and data than those running on edge devices.

The market for Edge AI hardware is anticipated to increase from 1,056 million units in 2022 to 2,716 million units by 2027, growing at a CAGR of 20.8% over the forecast period.
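As a quick consistency check, the growth rate implied by those two figures can be recomputed with the standard compound annual growth rate (CAGR) formula, (end / start)^(1 / years) - 1. This is a small illustrative sketch; the `cagr` helper is our own name, not part of any library.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Forecast figures quoted above, in millions of units (2022 -> 2027 is 5 years).
rate = cagr(1056, 2716, 2027 - 2022)
print(f"Implied CAGR: {rate:.1%}")
```

It prints `Implied CAGR: 20.8%`, which matches the quoted forecast.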

Edge AI works within these resource limits by running machine learning models on local data instead of sending all data to the cloud for processing. Because only relevant information is transmitted from the edge device back to the cloud, this approach results in faster response times and lower network bandwidth requirements.
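A minimal sketch of the "send only what matters" idea, assuming a stream of sensor readings and a hypothetical on-device scoring function. `local_score` is a simple deviation-from-baseline heuristic standing in for a trained model, and the baseline and 0.2 threshold are illustrative values, not recommendations.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass
class Reading:
    sensor_id: str
    value: float


def local_score(reading: Reading, baseline: float) -> float:
    """Hypothetical stand-in for an on-device model: relative deviation from a baseline."""
    return abs(reading.value - baseline) / max(abs(baseline), 1e-9)


def select_for_upload(readings: Iterable[Reading], baseline: float,
                      threshold: float = 0.2) -> List[Reading]:
    """Keep only the readings the local model considers relevant."""
    return [r for r in readings if local_score(r, baseline) > threshold]


readings = [Reading("temp-1", v) for v in (20.1, 20.3, 19.9, 27.5, 20.0, 12.0)]
to_send = select_for_upload(readings, baseline=20.0)
print(f"{len(to_send)} of {len(readings)} readings sent to the cloud")
```

Here only the two outlying readings (27.5 and 12.0) would be uploaded; the other four never leave the device.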

How Edge AI Works in Simple Terms

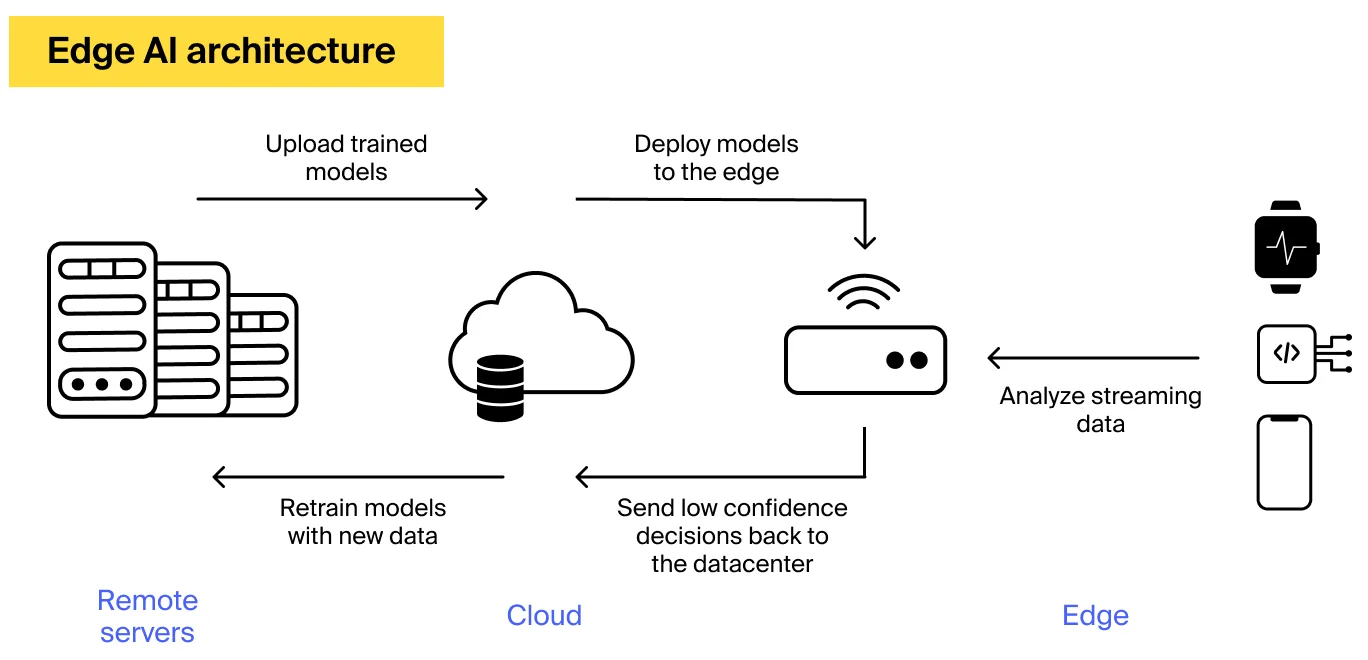

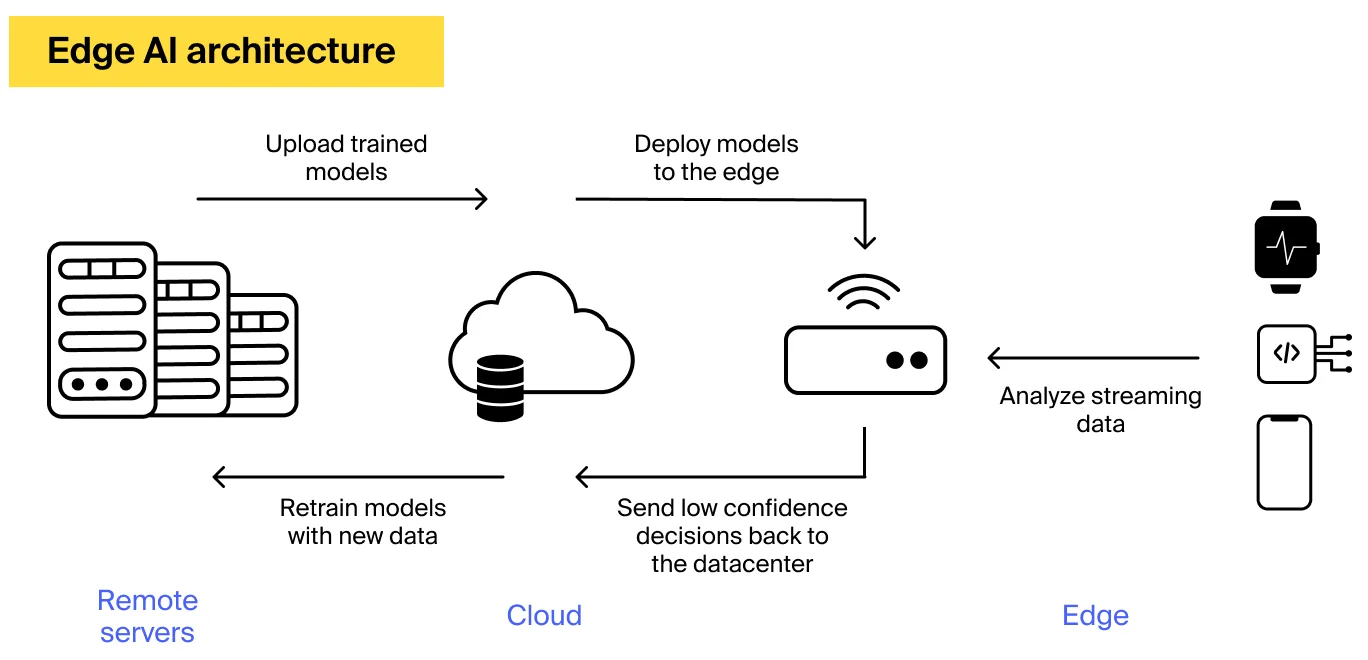

A typical Edge AI system combines two parts:

- Devices at the edge (like phones or sensors) that make decisions using incoming data.

- A central data center (often in the cloud) that helps improve those decisions over time.

Consider AI systems that must understand human speech or drive cars: they have to handle complex, unpredictable situations almost as well as a human would. To do this, they typically rely on deep learning, a family of AI algorithms based on neural networks.

First, these models are trained, often on large amounts of data using powerful computers in the cloud. This is like teaching them many examples until they become good at a task. After training, the models are deployed to small devices at the edge, like your phone, where they run independently.

If a model on the device encounters a problem or makes a mistake, the device can send the problematic data to the cloud. There, the model is retrained until it improves, and the improved version then replaces the old one on the device. This cycle repeats so that the Edge AI system keeps performing well over time.
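The train, deploy, and improve loop described above can be sketched as follows. Everything here is hypothetical and deliberately simplified: the "models" are plain functions returning a label and a confidence, low-confidence inputs stand in for "problems or mistakes", and `cloud_retrain` only imitates retraining so that the control flow is easy to follow.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple

# Hypothetical model type: maps an input to (label, confidence).
Model = Callable[[float], Tuple[str, float]]


@dataclass
class EdgeDevice:
    model: Model
    version: int = 1
    confidence_threshold: float = 0.8
    flagged: List[float] = field(default_factory=list)

    def infer(self, x: float) -> str:
        label, confidence = self.model(x)
        if confidence < self.confidence_threshold:
            # Low-confidence input: keep it so the cloud can learn from it.
            self.flagged.append(x)
        return label

    def install(self, model: Model, version: int) -> None:
        """Replace the on-device model with an updated one."""
        self.model, self.version = model, version


def cloud_retrain(hard_examples: List[float]) -> Model:
    """Hypothetical stand-in for cloud retraining.

    It only widens the input range the model is confident about so that it
    covers the flagged inputs; real retraining would fit a new model.
    """
    limit = max(abs(x) for x in hard_examples)

    def improved(x: float) -> Tuple[str, float]:
        return ("positive" if x >= 0 else "negative", 0.95 if abs(x) <= limit else 0.5)

    return improved


def initial_model(x: float) -> Tuple[str, float]:
    # Confident only for small-magnitude inputs.
    return ("positive" if x >= 0 else "negative", 0.95 if abs(x) <= 1 else 0.5)


device = EdgeDevice(initial_model)
for x in (0.5, -0.2, 3.0, -4.0):
    device.infer(x)

if device.flagged:  # the device reports its hard cases to the cloud
    device.install(cloud_retrain(device.flagged), device.version + 1)
    device.flagged.clear()
```

After one cycle the device runs version 2, which is now confident on the inputs (3.0 and -4.0) that the first version struggled with.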

Benefits of Edge AI

Edge AI is designed to take advantage of edge devices, such as those in the Internet of Things (IoT), by processing data on the device rather than in the cloud. This can reduce latency and bandwidth requirements while providing privacy benefits. Its main benefits include:

Real-Time Latency Reduction

With Edge AI, you don’t need to wait for data to travel from your device to the cloud before processing it. Instead, you can process data locally in real-time and immediately make decisions based on these results.
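To see where the latency saving comes from, a rough latency budget can be compared for the two paths. This is a back-of-the-envelope sketch; the frame size, link speed, round-trip time, inference times, and 100 ms deadline are hypothetical numbers chosen for illustration, not measurements.

```python
def transfer_ms(payload_bytes: int, bandwidth_mbps: float) -> float:
    """Time to push a payload over a link, in milliseconds (ignores protocol overhead)."""
    return payload_bytes * 8 / (bandwidth_mbps * 1_000_000) * 1000


def cloud_path_ms(payload_bytes: int, bandwidth_mbps: float,
                  rtt_ms: float, cloud_inference_ms: float) -> float:
    # Upload the input, wait one network round trip, run the model remotely.
    return transfer_ms(payload_bytes, bandwidth_mbps) + rtt_ms + cloud_inference_ms


def edge_path_ms(edge_inference_ms: float) -> float:
    # The input never leaves the device, so only local inference time counts.
    return edge_inference_ms


# Hypothetical figures for illustration only.
DEADLINE_MS = 100
cloud = cloud_path_ms(200_000, bandwidth_mbps=20, rtt_ms=60, cloud_inference_ms=10)
edge = edge_path_ms(edge_inference_ms=35)
meets_deadline = {"cloud": cloud <= DEADLINE_MS, "edge": edge <= DEADLINE_MS}
```

With these assumed figures the cloud path takes about 150 ms (80 ms of which is uploading the 200 KB frame) and misses the deadline, while the slower on-device model still finishes in 35 ms. Real numbers depend heavily on the network, the model, and the device hardware.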

Local Privacy Enhancement

Edge AI can enhance privacy by limiting how much data leaves the device or local network. This benefits consumers who want to maintain their privacy and companies that want to reduce their data's exposure to attackers.
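One common way to limit what leaves a device is to upload aggregates and pseudonymous identifiers instead of raw records. The sketch below assumes a hypothetical per-device secret key and event format, and uses Python's standard `hmac` and `hashlib` modules to replace user IDs with keyed hashes. Pseudonymized data is not fully anonymous, so this complements other privacy controls rather than replacing them.

```python
import hashlib
import hmac
from collections import Counter
from typing import Dict, List

# Hypothetical per-device secret; in practice it would be provisioned securely
# and never uploaded.
DEVICE_KEY = b"replace-with-a-provisioned-secret"


def pseudonymize(user_id: str, key: bytes = DEVICE_KEY) -> str:
    """Replace a direct identifier with a truncated keyed hash (HMAC-SHA256)."""
    return hmac.new(key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()[:16]


def build_upload(events: List[Dict[str, str]]) -> Dict[str, object]:
    """Summarize local events: counts per type plus pseudonymous user IDs."""
    return {
        "event_counts": dict(Counter(e["type"] for e in events)),
        "active_users": sorted({pseudonymize(e["user"]) for e in events}),
    }


# Raw events stay on the device; only `upload` would be sent.
events = [
    {"user": "alice", "type": "door_open"},
    {"user": "bob", "type": "motion"},
    {"user": "alice", "type": "door_open"},
]
upload = build_upload(events)
```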

Bandwidth Optimization

Edge AI optimizes bandwidth usage by sending only relevant information from the device to the cloud (or vice versa) instead of raw data streams. This reduces network load and leaves more capacity for other traffic.
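The bandwidth effect is easy to estimate. Assuming a hypothetical vibration sensor that samples at 1 kHz with 4-byte samples, and an edge model that instead uploads one 64-byte summary per minute, the daily volumes compare as follows (all figures are illustrative assumptions):

```python
SECONDS_PER_DAY = 24 * 60 * 60


def raw_bytes_per_day(sample_rate_hz: int, bytes_per_sample: int) -> int:
    """Volume if every raw sample is streamed to the cloud."""
    return sample_rate_hz * bytes_per_sample * SECONDS_PER_DAY


def summary_bytes_per_day(summaries_per_minute: int, bytes_per_summary: int) -> int:
    """Volume if the edge model uploads only periodic summaries."""
    return summaries_per_minute * 60 * 24 * bytes_per_summary


# Hypothetical sensor and summary sizes.
raw = raw_bytes_per_day(sample_rate_hz=1_000, bytes_per_sample=4)
summary = summary_bytes_per_day(summaries_per_minute=1, bytes_per_summary=64)
print(f"raw: {raw / 1e6:.1f} MB/day, summaries: {summary / 1e3:.1f} KB/day, "
      f"reduction: {raw / summary:,.0f}x")
```

Under these assumptions the device sends about 92 KB per day instead of about 346 MB, a 3,750-fold reduction.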

AI Training

Edge devices can also take part in training an AI system, for example through federated learning, in which each device computes model updates on its own data and sends only those updates to a central server. This spreads the training workload across many devices and keeps raw data local.
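One well-known way for edge devices to take part in training is federated learning with federated averaging (FedAvg): each device trains on its own data, and a server averages the resulting weights, weighted by how many samples each device holds. The sketch below is a toy version under stated assumptions: a one-feature linear model, plain gradient descent, and two hypothetical devices whose private data follow y = 1 + 2x.

```python
from typing import List, Sequence, Tuple

Weights = Tuple[float, float]             # (intercept, slope)
Dataset = Sequence[Tuple[float, float]]   # (x, y) pairs that never leave the device


def local_update(w: Weights, data: Dataset, lr: float = 0.5, epochs: int = 5) -> Weights:
    """Train y = b + m * x on one device's data with full-batch gradient descent (MSE)."""
    b, m = w
    n = len(data)
    for _ in range(epochs):
        grad_b = sum(2 * (b + m * x - y) for x, y in data) / n
        grad_m = sum(2 * (b + m * x - y) * x for x, y in data) / n
        b, m = b - lr * grad_b, m - lr * grad_m
    return b, m


def federated_average(updates: List[Weights], sizes: List[int]) -> Weights:
    """FedAvg aggregation: average client weights, weighted by local sample count."""
    total = sum(sizes)
    b = sum(u[0] * s for u, s in zip(updates, sizes)) / total
    m = sum(u[1] * s for u, s in zip(updates, sizes)) / total
    return b, m


# Two hypothetical devices with private data following y = 1 + 2x.
clients = [
    [(x, 1 + 2 * x) for x in (0.0, 0.25, 0.5)],
    [(x, 1 + 2 * x) for x in (0.5, 0.75, 1.0, 1.0)],
]

global_w: Weights = (0.0, 0.0)
for _ in range(100):  # communication rounds
    updates = [local_update(global_w, data) for data in clients]
    global_w = federated_average(updates, [len(d) for d in clients])
```

After 100 rounds the shared model is approximately (1, 2), even though neither device ever sent its raw (x, y) pairs to the server.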

Offline Functionality

Because the model runs locally, Edge AI devices can keep making predictions when offline or disconnected from the network. They can store their results and sync them once they return online, even after being unavailable for several days, weeks, or even months.
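Offline operation is usually paired with a store-and-forward buffer: results produced while disconnected are queued locally and uploaded in order once the connection returns. A minimal sketch, assuming a hypothetical `send` callback that returns True on success. The bounded `deque` means that during a very long outage the oldest results are discarded first; persistent storage is a common alternative when nothing may be lost.

```python
from collections import deque
from typing import Callable, Deque, Dict, List


class StoreAndForward:
    """Queue results produced offline and upload them in order when back online."""

    def __init__(self, send: Callable[[Dict], bool], max_items: int = 10_000):
        self._send = send  # hypothetical uploader: returns True on success
        # Bounded queue: when full, appending silently drops the oldest item.
        self._buffer: Deque[Dict] = deque(maxlen=max_items)

    def record(self, result: Dict, online: bool) -> None:
        self._buffer.append(result)
        if online:
            self.flush()

    def flush(self) -> int:
        """Upload buffered results oldest-first; stop at the first failure."""
        sent = 0
        while self._buffer:
            if not self._send(self._buffer[0]):
                break  # keep the item and retry on the next flush
            self._buffer.popleft()
            sent += 1
        return sent

    def __len__(self) -> int:
        return len(self._buffer)


# Usage with a fake uploader that always succeeds.
delivered: List[Dict] = []


def fake_send(result: Dict) -> bool:
    delivered.append(result)
    return True


buffer = StoreAndForward(fake_send, max_items=3)
for seq in range(4):  # device is offline: results queue up
    buffer.record({"seq": seq}, online=False)
sent = buffer.flush()  # connection restored: the queue drains
```

With `max_items=3`, the oldest of the four offline results (`seq` 0) is dropped, and the remaining three are delivered in order when the device reconnects.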

Reduced Cloud Costs

Cloud costs can be prohibitively expensive for small businesses with limited budgets or for companies with steady, high-volume workloads.

Because Edge AI processes much of the data locally, less data has to be transferred to, stored in, and processed in the cloud, which can lower cloud bills for these organizations.

Scalability

Edge AI scales with the number of devices: each new edge device brings its own compute, so inference capacity grows as the fleet grows. The cloud, meanwhile, can absorb peak demand for training and analytics without investment in new infrastructure or upgrades to existing equipment.

This reduces the risk of slowdowns or downtime when demand spikes and keeps central processing costs from growing in step with usage, so businesses can focus on growing their customer base.

Data Security

Edge AI enables companies to process data at the source rather than sending it to the cloud for processing. This makes it a more secure option for handling sensitive information.

When data is processed at its source, less of it travels over networks or sits in central storage, which reduces the opportunities for attackers to intercept, steal, or manipulate it.

Real-time Decision-making

Edge AI can also enable real-time decision-making. This is an important aspect of business intelligence because companies need fast responses to events to be competitive and responsive.

By analyzing data and acting on current information locally, businesses can respond to events without waiting for data to reach a central office or for instructions to come back from it.

Contextual Insight

Again, because Edge AI handles data locally, it allows businesses to gain more insight into what’s happening around them at any moment.

This makes it easier for them to respond quickly and intelligently when something happens that requires their attention — like a customer complaint or an equipment failure.
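As an illustration of spotting an equipment problem locally, the sketch below flags sensor readings that deviate sharply from the recent history using a rolling z-score. This is a simple statistical technique chosen for illustration, not a trained model; the window size, minimum history, and threshold of 3 standard deviations are assumptions to tune per machine.

```python
import statistics
from collections import deque
from typing import Deque


class RollingAnomalyDetector:
    """Flag readings far from the mean of a sliding window of recent readings."""

    def __init__(self, window: int = 50, z_threshold: float = 3.0, min_history: int = 10):
        self.history: Deque[float] = deque(maxlen=window)
        self.z_threshold = z_threshold
        self.min_history = min_history  # don't judge until enough context exists

    def update(self, value: float) -> bool:
        """Return True if `value` is anomalous relative to the current window."""
        anomalous = False
        if len(self.history) >= self.min_history:
            mean = statistics.fmean(self.history)
            std = statistics.pstdev(self.history, mu=mean)
            anomalous = std > 0 and abs(value - mean) / std > self.z_threshold
        self.history.append(value)
        return anomalous


# Hypothetical vibration amplitudes from a machine; the last one is a spike.
readings = [1.0, 1.1, 0.9, 1.0, 1.05, 0.95, 1.0, 1.1, 0.9, 1.0, 5.0]
detector = RollingAnomalyDetector()
flags = [detector.update(v) for v in readings]
alerts = [v for v, flagged in zip(readings, flags) if flagged]
```

Only the spike to 5.0 is flagged, so the device could raise an alert immediately without consulting the cloud.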

Cloud Computing AI vs. Edge AI

Cloud AI and Edge AI are complementary rather than competing: models are often trained in the cloud and then run at the edge. The main difference is where inference happens, which affects latency, bandwidth, privacy, and scalability:

| Cloud Computing AI | Edge AI |
|---|---|
| Higher latency due to centralized processing. | Lower latency and faster response times. |
| Requires more bandwidth for data transmission. | Demands less bandwidth due to local processing. |
| Slower real-time response due to data travel time. | Faster real-time responses by avoiding cloud delays. |
| Raises privacy concerns with external data transfer. | Addresses privacy concerns by processing locally. |
| Energy efficiency might be lower due to remote processing. | Generally more energy-efficient due to local processing. |
| Easily scalable by adding resources to data centers. | Scalability limited by device capabilities. |
| Continuous internet connection required for operation. | Works offline or with intermittent connectivity. |

Edge AI Use Cases

Edge AI is an emerging technology that has several use cases in different verticals, including:

Real-Time Computer Vision in Unmanned Aerial Systems

In an unmanned aerial system (UAS), the on-board camera captures images from which the system must infer the state of objects around it: whether people are present, whether cars are parked on the road, and whether there are obstacles in its path. Processing this on board helps determine how to navigate safely without crashing into anything or hurting anyone.
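Once an on-board detector has produced its detections, turning them into a navigation decision can happen immediately because nothing has to leave the aircraft. The sketch below assumes a hypothetical detection format (label, distance, bearing) and a hypothetical policy with made-up clearance distances; a real flight controller would be far more involved.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Detection:
    label: str          # class predicted by a hypothetical on-board detector
    distance_m: float   # estimated range to the object
    bearing_deg: float  # angle from the direction of travel; 0 means dead ahead


def choose_action(detections: List[Detection],
                  person_clearance_m: float = 15.0,
                  obstacle_clearance_m: float = 8.0,
                  forward_cone_deg: float = 30.0) -> str:
    """Hypothetical policy mapping detections to 'hover', 'avoid', or 'continue'."""
    # People anywhere inside the clearance radius take priority.
    if any(d.label == "person" and d.distance_m < person_clearance_m for d in detections):
        return "hover"
    # Other objects matter only if they are close and roughly in the flight path.
    if any(d.distance_m < obstacle_clearance_m and abs(d.bearing_deg) <= forward_cone_deg
           for d in detections):
        return "avoid"
    return "continue"


frame = [Detection("car", 20.0, 5.0), Detection("tree", 6.0, -10.0)]
action = choose_action(frame)
```

For this frame the nearby tree slightly left of the flight path triggers `"avoid"`.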

Edge-based Genome Sequencing Analysis

Edge-based genome sequencing analysis means processing DNA sequencing data at the point where it is generated, rather than first shipping it to a central data center. This typically requires several tools, including:

- Deep learning models for gene prediction and variant calling (e.g., for single nucleotide polymorphisms (SNPs), short insertions/deletions (INDELs), and structural variants).

- A distributed computing framework (e.g., Spark) to parallelize work across multiple machines or devices.

Federated Learning for Decentralized Healthcare Diagnostics

Decentralized healthcare diagnostics is an approach to healthcare built on an emerging technology called federated learning. It uses data from many individuals or institutions to train deep learning models for personalized healthcare predictions.

Federated learning protects privacy by keeping raw data where it was collected: each participant trains the model locally and sends only model updates to the central server. Techniques such as secure aggregation and encryption can further protect those updates, so you can help train a shared model without storing sensitive records in the cloud.
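The secure-aggregation idea can be illustrated with pairwise masking: every pair of participants shares a random mask that one adds and the other subtracts, so the server can recover the sum of all updates without seeing any individual one. This is a simplified toy under strong assumptions: updates are already quantized to non-negative integers, no participant drops out, and `random.Random` seeded from the pair's IDs stands in for a cryptographically secure key agreed between the two parties. Production protocols handle all three properly.

```python
import random
from typing import Dict, List

MOD = 2 ** 32  # arithmetic modulo 2^32 so the masks cancel exactly


def pairwise_masks(client_ids: List[int], dim: int, round_seed: int) -> Dict[int, List[int]]:
    """Give each client a mask vector; all masks together sum to zero mod MOD."""
    masks = {c: [0] * dim for c in client_ids}
    for i in client_ids:
        for j in client_ids:
            if i < j:
                # Toy stand-in for a secret both i and j know (NOT cryptographically secure).
                rng = random.Random(f"{round_seed}:{i}:{j}")
                shared = [rng.randrange(MOD) for _ in range(dim)]
                masks[i] = [(a + s) % MOD for a, s in zip(masks[i], shared)]
                masks[j] = [(a - s) % MOD for a, s in zip(masks[j], shared)]
    return masks


def mask_update(update: List[int], mask: List[int]) -> List[int]:
    """What a client actually uploads: its quantized update plus its mask."""
    return [(u + m) % MOD for u, m in zip(update, mask)]


def aggregate(masked_updates: List[List[int]]) -> List[int]:
    """Server sums the masked vectors; the pairwise masks cancel out."""
    return [sum(column) % MOD for column in zip(*masked_updates)]


# Hypothetical quantized model updates from three hospitals.
updates = {1: [5, 0, 7], 2: [1, 2, 3], 3: [10, 20, 30]}
masks = pairwise_masks(list(updates), dim=3, round_seed=42)
uploaded = [mask_update(updates[c], masks[c]) for c in updates]
total = aggregate(uploaded)
```

The server obtains the element-wise sum `[16, 22, 40]`, while each uploaded vector on its own looks random.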

High-Frequency Trading Algorithms

High-frequency trading algorithms require real-time information about market movements and transactions. With edge AI, these algorithms can run their analytics on trading platforms or directly on trading terminals, close to where market data arrives, instead of in a distant data center. This shortens the time between a market event and the order it triggers, allowing traders to respond faster to changing market conditions.

Autonomous Navigation in Underground Mining Robots

Edge AI extends IoT technology by enabling data from sensors to be collected and analyzed at the network’s edge. This is particularly important for autonomous mining robots operating underground, where network connectivity is often limited or unreliable. The robot processes its sensor data on board to navigate its environment in real time, and the collected data can be transmitted to a cloud-based system whenever a connection is available for deeper analysis and model improvement.
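Because navigation has to keep working when the link to the surface drops, path planning is a natural candidate to run on the robot itself. As a sketch, here is A* search on a small occupancy grid. The tunnel map is hypothetical, `#` marks blocked cells, and a real robot would build its map from its own sensors and plan in continuous space; A* is one standard planning algorithm among several, not necessarily what any particular mining robot uses.

```python
import heapq
from typing import Dict, List, Optional, Tuple

Cell = Tuple[int, int]


def astar(grid: List[str], start: Cell, goal: Cell) -> Optional[List[Cell]]:
    """Shortest 4-connected path on an occupancy grid ('#' = blocked), or None."""
    rows, cols = len(grid), len(grid[0])

    def h(cell: Cell) -> int:
        # Manhattan distance: admissible and consistent for unit-cost 4-way moves.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap: List[Tuple[int, int, Cell]] = [(h(start), 0, start)]
    came_from: Dict[Cell, Cell] = {}
    best_g: Dict[Cell, int] = {start: 0}

    while open_heap:
        _, g, cell = heapq.heappop(open_heap)
        if cell == goal:
            path = [cell]
            while cell in came_from:  # walk back to the start
                cell = came_from[cell]
                path.append(cell)
            return path[::-1]
        if g > best_g.get(cell, float("inf")):
            continue  # stale heap entry; a cheaper route was already found
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] != "#":
                ng = g + 1
                if ng < best_g.get(nxt, float("inf")):
                    best_g[nxt] = ng
                    came_from[nxt] = cell
                    heapq.heappush(open_heap, (ng + h(nxt), ng, nxt))
    return None


# Hypothetical tunnel map: the wall in column 4 is open only in rows 2 and 4.
tunnel = [
    "....#....",
    ".##.#.##.",
    ".#.....#.",
    ".#.###.#.",
    "...#.....",
]
path = astar(tunnel, (0, 0), (0, 8))
```

The planner finds a 12-move route that dips down to row 2 to get around the wall, entirely on the robot.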


Incorporating Kubernetes into Edge AI

Incorporating Kubernetes into Edge AI deployments introduces a transformative paradigm, bolstering efficiency, scalability, and security. Kubernetes schedules workloads according to their declared CPU and memory requests, helping make efficient use of resources across a varied fleet of edge devices.

Kubernetes’ dynamic scaling capabilities let edge AI applications adapt to evolving workloads and help maintain service even during periods of peak demand. To get started, check our guide The Ultimate Guide to Getting Started With Kubernetes [2024 Updated].

Moreover, Kubernetes’ fault-tolerance mechanisms, such as automatically restarting failed containers and rescheduling workloads onto healthy nodes, minimize downtime and strengthen the reliability of edge AI systems. Kubernetes also simplifies edge device management by streamlining deployment and enabling centralized monitoring and updates.

Crucially, Kubernetes facilitates seamless integration between edge and cloud environments, enabling edge devices to leverage cloud-based services for tasks such as model training and analytics. With Kubernetes’ advanced security features, including role-based access control and encryption, organizations can safeguard sensitive edge AI data and uphold regulatory compliance. Harnessing the power of Kubernetes in Edge AI not only enhances operational efficiency and scalability but also fortifies data security, driving advancements in AI-driven edge computing.
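To make the scheduling and scaling points concrete, the sketch below builds a Kubernetes Deployment and a HorizontalPodAutoscaler (`autoscaling/v2`) for an edge inference service and prints them as a JSON `List`, which `kubectl apply -f` accepts as well as YAML. The application name, container image, resource figures, replica bounds, and the `node-role.kubernetes.io/edge` node label are assumptions for illustration; use the labels your edge nodes actually carry. CPU-based autoscaling also requires a metrics source such as metrics-server in the cluster.

```python
import json

APP = "edge-inference"                             # hypothetical application name
IMAGE = "registry.example.com/edge-inference:1.0"  # hypothetical container image

deployment = {
    "apiVersion": "apps/v1",
    "kind": "Deployment",
    "metadata": {"name": APP, "labels": {"app": APP}},
    "spec": {
        "replicas": 1,
        "selector": {"matchLabels": {"app": APP}},
        "template": {
            "metadata": {"labels": {"app": APP}},
            "spec": {
                # Assumed label marking edge nodes; adjust to your cluster.
                "nodeSelector": {"node-role.kubernetes.io/edge": ""},
                "containers": [{
                    "name": APP,
                    "image": IMAGE,
                    # Requests guide scheduling; limits cap usage on small devices.
                    "resources": {
                        "requests": {"cpu": "500m", "memory": "256Mi"},
                        "limits": {"cpu": "1", "memory": "512Mi"},
                    },
                }],
            },
        },
    },
}

autoscaler = {
    "apiVersion": "autoscaling/v2",
    "kind": "HorizontalPodAutoscaler",
    "metadata": {"name": APP},
    "spec": {
        "scaleTargetRef": {"apiVersion": "apps/v1", "kind": "Deployment", "name": APP},
        "minReplicas": 1,
        "maxReplicas": 4,
        # Add replicas when average CPU use exceeds 70% of the requested CPU.
        "metrics": [{
            "type": "Resource",
            "resource": {"name": "cpu", "target": {"type": "Utilization", "averageUtilization": 70}},
        }],
    },
}

manifest = {"apiVersion": "v1", "kind": "List", "items": [deployment, autoscaler]}
print(json.dumps(manifest, indent=2))
```

Saving the output as `manifest.json` and running `kubectl apply -f manifest.json` would create both objects.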

Delivering Edge AI with Taikun’s Kubernetes Management Solution

As organizations navigate the complexities of deploying and managing Kubernetes at the edge, Taikun’s CloudWorks Kubernetes Management Solution stands out as an essential tool for both Day 1 and Day 2 operations. Taikun CloudWorks simplifies the intricacies of Kubernetes management, offering a streamlined platform that enhances the deployment, scaling, and security of edge AI applications. By integrating with Taikun CloudWorks, teams can efficiently manage their Kubernetes environments, ensuring that applications are not only deployed with precision but are also maintained with ease, adapting to the ever-changing demands of the edge.


Taikun CloudWorks revolutionizes the way organizations approach Kubernetes management, ensuring that the focus remains on innovation and efficiency rather than the complexities of operational management. Whether you are setting up your Kubernetes environment (Day 1) or optimizing and managing it (Day 2), Taikun CloudWorks provides the tools and insights necessary to maximize performance and reliability. Embrace the future of edge computing with Taikun CloudWorks. Start exploring the full potential of your Kubernetes deployments today by signing up for a free trial or scheduling a consultation with our experts.