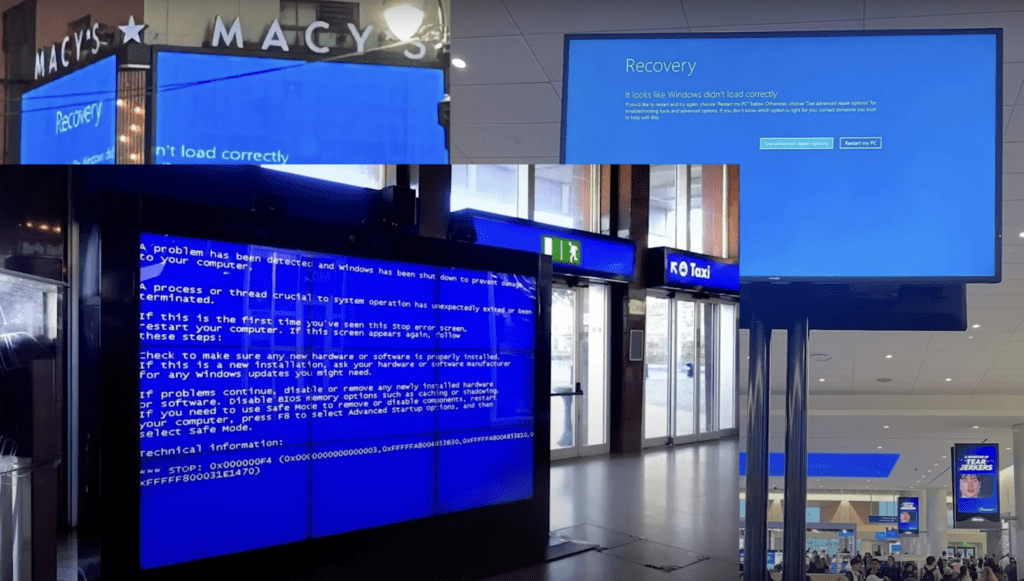

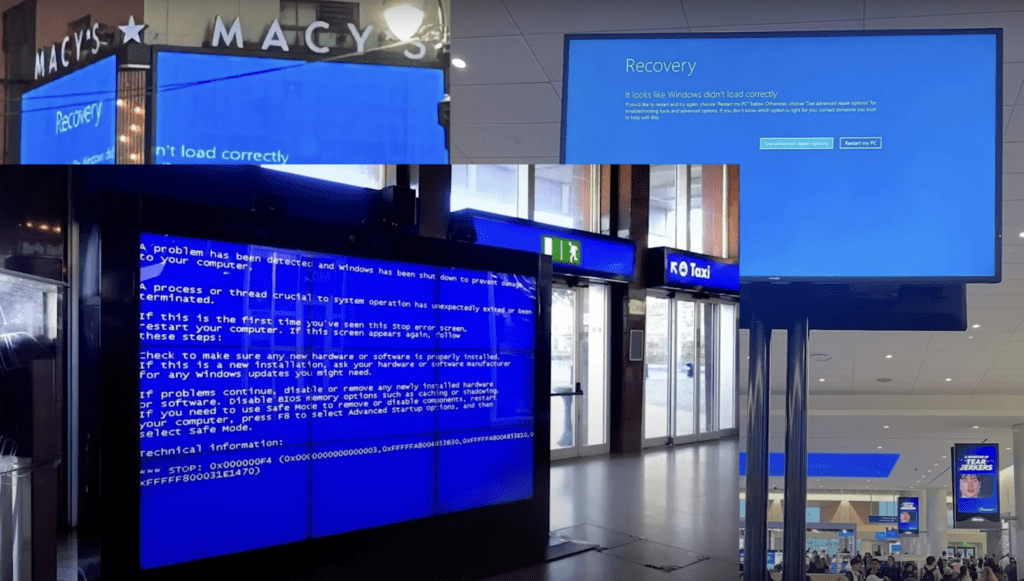

The CrowdStrike Incident: A Global Wake-Up Call for Cloud Resilience

On July 19, 2024, the digital world experienced a seismic shock when a CrowdStrike update to Falcon Sensor, its software that scans computers for intrusions and signs of hacking, caused a global outage affecting countless organizations worldwide. As a Principal Product Evangelist at Taikun, I believe this incident highlights the critical […]

Announcing Our Strategic Partnership with Sardina Systems

We’re thrilled to announce our strategic partnership with Sardina Systems, a leading provider of OpenStack cloud solutions. This collaboration brings together the best of both worlds: Taikun CloudWorks’ advanced ‘Managed Kubernetes’ and application delivery platform, and Sardina Systems’ FishOS cloud management platform. At Taikun, we’re on a mission to make Kubernetes almost invisible to […]

Demystifying WASM on Kubernetes: A Deep Dive into Container Runtimes and Shims

To understand how to set up and work with WASM on Kubernetes, we must first understand how a container works within the Kubernetes ecosystem.

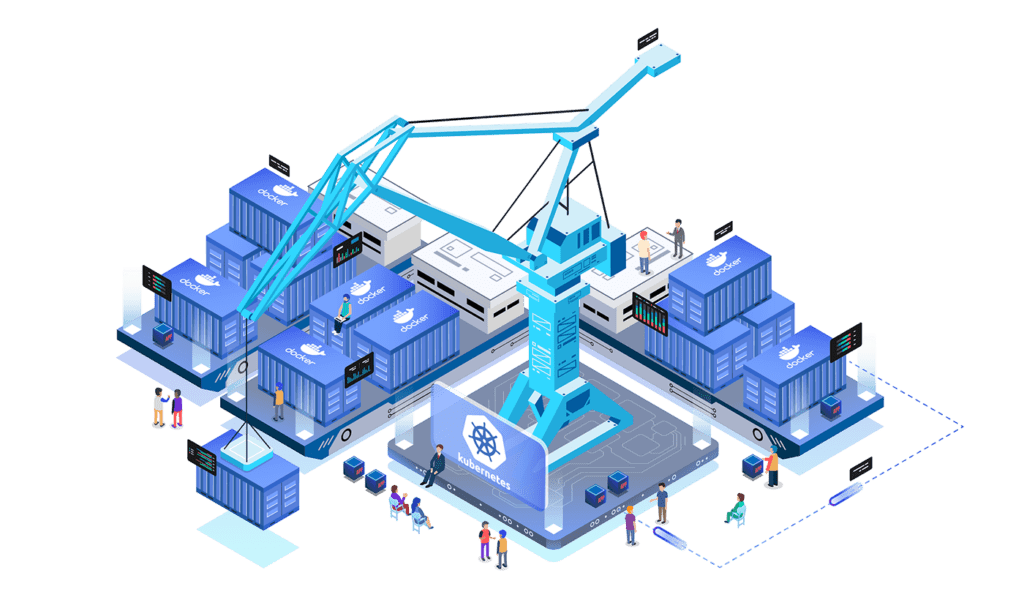

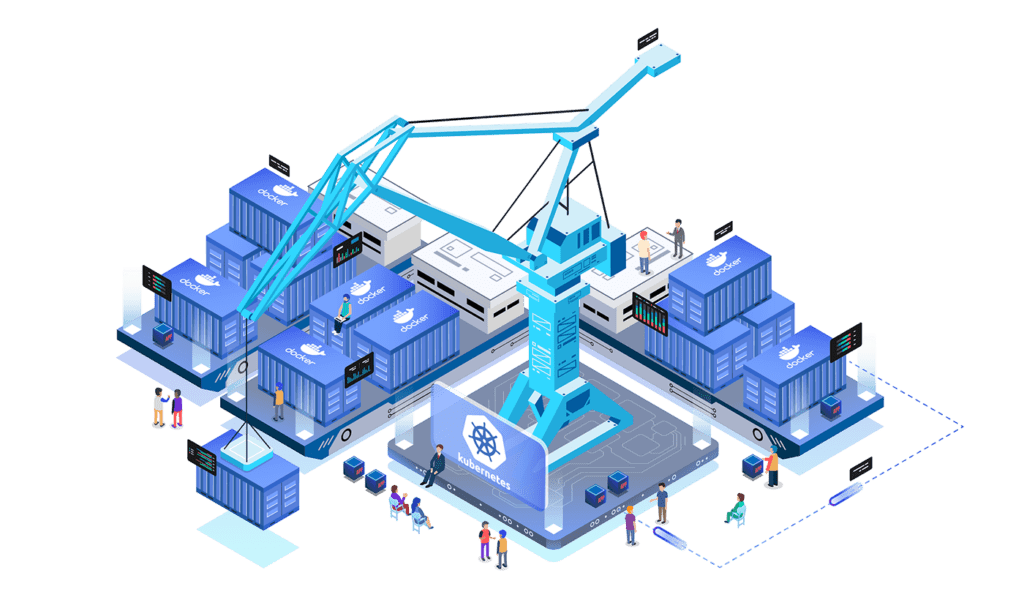

How to seamlessly adopt Kubernetes in your Platform Engineering workflow

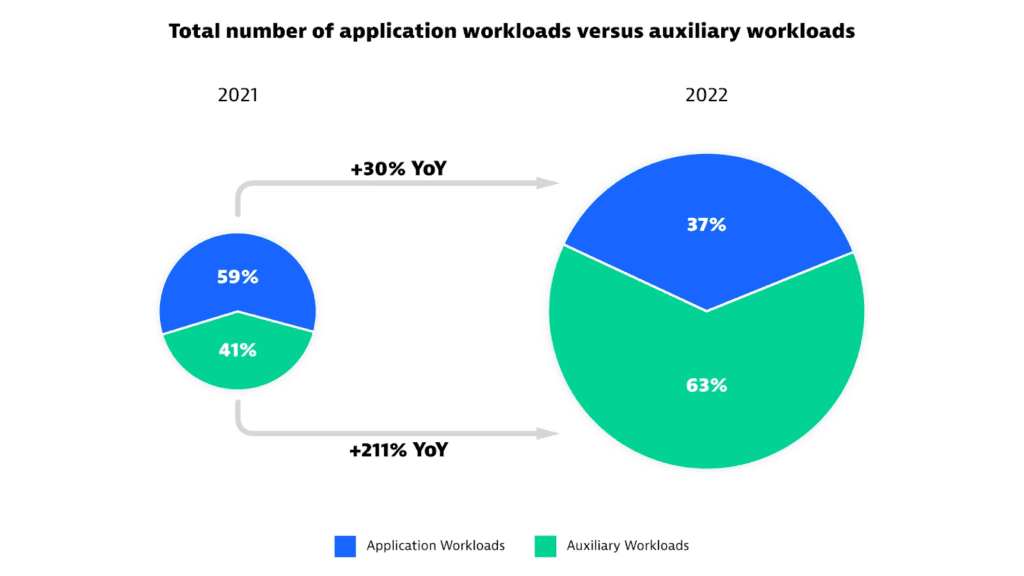

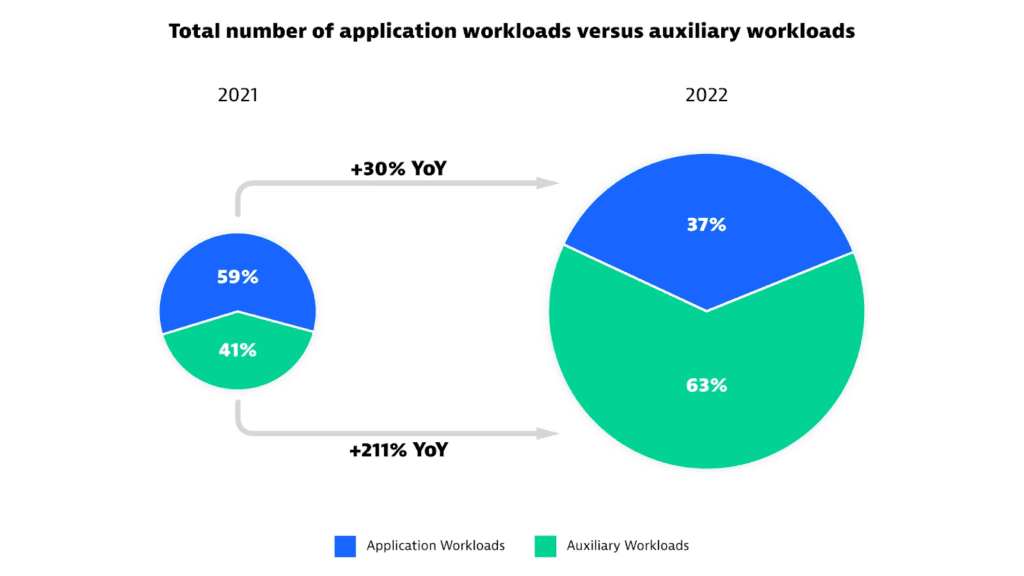

There is a clear preference for Kubernetes-based ecosystems in cloud setups. This is evident from the fact that more organizations today are adopting Kubernetes for auxiliary workloads.

Platform Engineering on Kubernetes – Architecture and Tooling

As the world moves to self-service platform infrastructure, especially in cloud-native environments, Kubernetes plays a crucial role in building flexible, resilient, and scalable infrastructure.

In this blog, we continue our conversation on how the Kubernetes ecosystem helps engineering teams build these cloud-native platforms. We will focus on the architecture questions that must be answered to build a resilient self-service platform on Kubernetes.

Understanding Platform Engineering in a Kubernetes Ecosystem

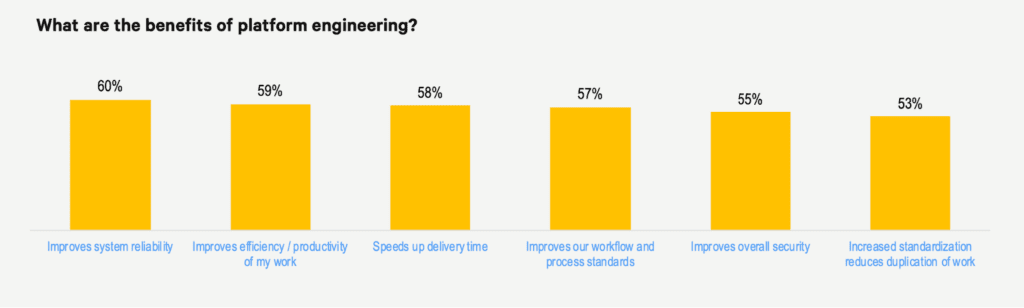

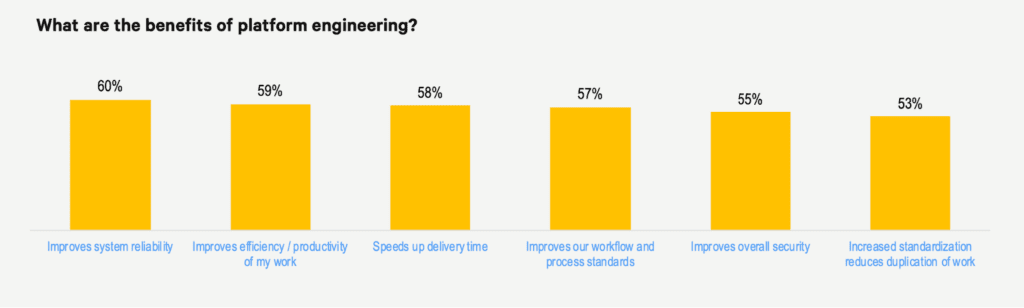

Gartner listed Platform Engineering as an emerging technology in its 2022 Hype Cycle. Its presence around the world has only grown since.

How to Use Kubernetes for Machine Learning and Data Science Workload

This piece covers Kubernetes’s strengths and how they can be applied to data science and machine learning projects. We will discuss its fundamental principles and building blocks to help you successfully install and manage machine learning workloads on Kubernetes. Moreover, this article offers essential insights and practical direction on making the most of this powerful platform, whether you’re just starting with Kubernetes or trying to enhance your machine learning and data science operations.

Kubernetes in Production: Tips and Tricks for Managing High Traffic Loads

In today’s digital world, websites and applications are expected to handle high traffic volumes, especially during peak hours or promotional campaigns. When server resources become overwhelmed, the result can be slower response times, decreased performance, and even complete service disruptions.

How To Run Applications on Top Of Kubernetes

In our series on Kubernetes so far, we have covered a wide range of topics. We started with a comprehensive guide to help you get started on Kubernetes. From there, we got into the details of Kubernetes architecture and why Kubernetes remains a pivotal tool in any cloud infrastructure setup. We then covered other useful Kubernetes concepts, namely namespaces, workloads, and deployments, discussed the Kubernetes command-line tool, kubectl, in detail, and explained how Kubernetes differs from another popular container orchestration tool, Docker Swarm. In this blog, we will consolidate all our learnings and discuss how to run applications on Kubernetes.

Although we have covered this topic in pieces, we feel it deserves a blog of its own.

Kubernetes Deployment: How to Run a Containerized Workload on a Cluster

In this blog, we will discuss Kubernetes deployments in detail. We will cover everything you need to know to run a containerized workload on a cluster. The smallest unit of a Kubernetes deployment is a pod, which is a collection of one or more containers. So the smallest deployment in Kubernetes would be a single pod with one container in it. As you may know, Kubernetes is a declarative system: you describe the system you want and let Kubernetes take action to create it.
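
To make the declarative idea concrete, here is a minimal sketch in Python that builds the smallest deployment described above: one pod template with a single container. The helper name `build_deployment`, the application name `hello-web`, and the image `nginx:1.27` are placeholders chosen for illustration, not something taken from the original post. The field names follow the standard `apps/v1` Deployment schema.

```python
import json


def build_deployment(name: str, image: str, replicas: int = 1) -> dict:
    """Return a minimal apps/v1 Deployment manifest as a plain dict.

    This only describes the desired state; nothing is sent to a cluster.
    """
    # The selector must match the pod template labels so the Deployment
    # can find the pods it owns.
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    # One pod with one container: the smallest deployment.
                    "containers": [{"name": name, "image": image}],
                },
            },
        },
    }


if __name__ == "__main__":
    # Placeholder name and image, used here only as an example.
    manifest = build_deployment("hello-web", "nginx:1.27")
    print(json.dumps(manifest, indent=2))
```

Because `kubectl apply -f` accepts JSON as well as YAML, the printed output can be saved to a file and applied to a cluster. From there, Kubernetes works to make the running state match the description.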